Why GPU rendering will dominate the 3D industry.

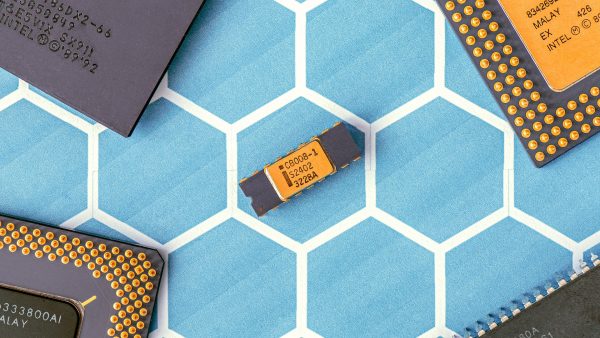

GPUs have been used for years to draw on-screen graphics, but using them to render final output has only now come of age. Many popular rendering packages offer GPU-based alternatives to their flagship software. Chaos Group makes a GPU-based version of V-Ray called V-Ray RT. Nvidia created iRay as an alternative to Mental Ray. Standalone GPU renderers such as Redshift, Octane and Furryball are also becoming increasingly popular.

GPU rendering can be much faster than conventional CPU rendering because it relies on massive parallelism rather than on the speed of a single processor. The speedup comes from the way different processors handle their work. The main processor on a motherboard is good at performing a few difficult calculations at a time. Think of the CPU as a factory manager who makes difficult decisions carefully.

A GPU, on the other hand, is more like a whole crew of workers on the factory floor. Although they can't perform the same kind of calculations as the manager, they can handle many, many more tasks at once without getting overwhelmed. Many rendering tasks are exactly the kind of repetitive, brute-force work that GPUs are good at. You can also install several GPUs in a single computer. All of this means that GPU systems can often render much, much faster.

There is also a big advantage that comes long before the final output is produced. GPU rendering is often fast enough to give real-time feedback while you work. You no longer have to drink a cup of coffee while your preview renders; you can watch material and lighting changes happen before your eyes.

So why don't we all just switch to GPU rendering and go home early? It's not that simple. GPU-based renderers are not as mature as their older, CPU-based cousins. Developers are constantly adding new features, but these renderers still don't support every tool that 3D artists expect from a rendering solution. Features such as displacement, hair and volumetrics are often missing from GPU-based engines. The biggest problem with GPU rendering, however, may be the way graphics processors handle a scene.

GPU rendering's all-in-one approach requires the entire 3D scene to be loaded into the graphics card's memory before it can render. Large scenes with tons of polygons and many high-resolution textures simply won't fit, and so won't render, with some GPU-based solutions.
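To see why memory becomes the bottleneck, it helps to estimate roughly how much memory a scene needs. The Python sketch below is a hypothetical back-of-the-envelope calculator, not part of any renderer. It assumes uncompressed 8-bit RGBA textures with a full mipmap chain (which adds about one third) and an invented average of 64 bytes per triangle for geometry. Real renderers compress textures, instance geometry and in some cases stream data out of core, so treat the result only as an order-of-magnitude check.

```python
# Hypothetical estimate of the GPU memory a scene needs.
# Assumptions (not from any specific renderer):
#   - textures are uncompressed 8-bit RGBA (4 bytes per texel)
#   - a full mipmap chain adds about one third on top of the base level
#   - geometry costs a made-up average of 64 bytes per triangle

BYTES_PER_TEXEL = 4        # 8-bit RGBA
BYTES_PER_TRIANGLE = 64    # hypothetical average: vertices, normals, UVs, indices
GIB = 1024**3


def texture_bytes(width: int, height: int, mipmaps: bool = True) -> int:
    """Memory for one uncompressed RGBA8 texture, optionally with mipmaps."""
    base = width * height * BYTES_PER_TEXEL
    # A full mip chain is 1 + 1/4 + 1/16 + ... which approaches 4/3 of the base.
    return base * 4 // 3 if mipmaps else base


def scene_bytes(textures: list[tuple[int, int]], triangles: int) -> int:
    """Total estimate: all textures plus geometry."""
    texture_total = sum(texture_bytes(w, h) for w, h in textures)
    return texture_total + triangles * BYTES_PER_TRIANGLE


def fits_in_vram(required: int, vram_gib: float) -> bool:
    """True if the estimate fits into a card with vram_gib GiB of memory."""
    return required <= vram_gib * GIB


if __name__ == "__main__":
    # Example scene: 40 textures at 8192 x 8192 and 20 million triangles.
    total = scene_bytes([(8192, 8192)] * 40, 20_000_000)
    print(f"Estimated scene size: {total / GIB:.1f} GiB")
    for vram in (11, 24):  # illustrative card sizes
        print(f"{vram} GiB card: {'fits' if fits_in_vram(total, vram) else 'too large'}")
```

Under these assumptions a single 8192 × 8192 texture already needs 256 MiB before mipmaps, and the example scene comes to about 14.5 GiB: too large for an 11 GiB card, but within a 24 GiB one. A CPU renderer, by contrast, can draw on the much larger system RAM.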

There is also a learning curve. Many GPU renderers require their own specific materials, shaders and lighting setups. As a result, scenes set up for CPU-based rendering cannot simply be switched over to a GPU renderer, even when the same company makes both products. 3D artists have to decide at the start of a project which workflow they want to use.

Will GPU rendering ever catch up with CPU-based software? Will it dominate the 3D industry? Time will tell. In the meantime, the best way to render quickly while still enjoying the advanced features of CPU rendering is to use a cloud solution such as Rayvision.

We hope this post has given you an overview of where GPU rendering is headed. If you have any comments or questions, please leave us a comment below.

Thanks for visiting.