Beginner's Guide: What Is Rendering?

If you have only recently started working with 3D, you may have wondered what exactly rendering means.

A mathematical and scientific analysis of the term would go beyond the scope of this article. Instead, we will take a closer look at the role rendering plays in computer graphics.

The process is similar to developing film.

Rendering is the most technically demanding part of 3D production, but it is easy to understand through an analogy: just as a film photographer has to develop and print photos before they can be shown, computer graphics artists face a similar requirement.

When a computer graphics artist works on a 3D scene, the models they manipulate are really a mathematical representation of points and surfaces (more precisely, vertices and polygons) in three-dimensional space.
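The Python sketch below shows one minimal way such a model could be held in memory: a list of vertex positions plus triangular faces that refer to those vertices by index. The `Mesh` class, its fields and the `face_normal` helper are hypothetical illustrations, not the data format of any particular 3D package.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Mesh:
    """Hypothetical minimal mesh: vertex positions plus triangles that index into them."""
    vertices: List[Vec3]
    faces: List[Tuple[int, int, int]]  # each face stores three vertex indices

    def face_vertices(self, i: int) -> List[Vec3]:
        return [self.vertices[j] for j in self.faces[i]]

    def face_normal(self, i: int) -> Vec3:
        """Unnormalized normal of face i via the cross product of two edges."""
        a, b, c = self.face_vertices(i)
        u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
        v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
        return (u[1] * v[2] - u[2] * v[1],
                u[2] * v[0] - u[0] * v[2],
                u[0] * v[1] - u[1] * v[0])

# A unit square in the XY plane, built from two triangles that share an edge
quad = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    faces=[(0, 1, 2), (0, 2, 3)],
)
```

Sharing vertices between faces (indices 0 and 2 here) is what keeps the surface connected; both triangles report the normal (0, 0, 1), so the square faces the +Z direction.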

The term rendering refers to the calculations that the render engine of a 3D software package performs to translate the scene from a mathematical approximation into a finished 2D image. In the process, the spatial, texture and lighting information of the entire scene is combined to determine the color value of every pixel in the flattened image.
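One small piece of that translation, deciding where a 3D point lands in the 2D image, can be sketched with a simple pinhole camera. The function name, the camera convention (camera at the origin looking down the negative z-axis) and the default focal length are assumptions for illustration. The sketch ignores aspect ratio and computes no color at all, which is the part that depends on textures and lighting.

```python
def project_to_pixel(point, width, height, focal_length=1.0):
    """Map a 3D point (camera space, camera looking down -z) to pixel coordinates."""
    x, y, z = point
    if z >= 0:
        raise ValueError("point is at or behind the camera")
    # Perspective divide: the farther away a point is, the closer it moves to the center
    x_ndc = focal_length * x / -z
    y_ndc = focal_length * y / -z
    # Map the range [-1, 1] to pixels; image rows grow downward, so y is flipped
    px = (x_ndc + 1.0) * 0.5 * width
    py = (1.0 - y_ndc) * 0.5 * height
    return px, py
```

Moving the point (1, 0, z) from z = -1 to z = -2 pulls it from the right edge of a 640-pixel-wide image (x = 640) halfway back toward the center (x = 480): this perspective divide is what makes distant objects look smaller.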

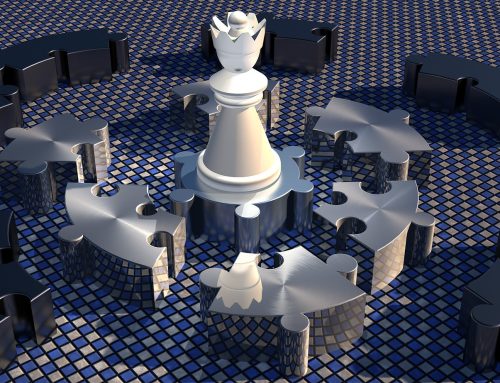

Das folgende Bild veranschaulicht die computergestützte Nachbildung eines Bentleys:

There are two different types of rendering:

The main difference between the two is the speed at which images are computed and finalized.

Real-time rendering is used most often in games and interactive graphics, where images have to be computed from 3D information at extremely high speed.

- Interactivity: Because it is impossible to predict exactly how a player will interact with the game environment, images have to be rendered in "real time".

- Speed: For motion to look smooth, at least 18 to 20 frames per second must be shown on screen. Anything less looks choppy.

- Methods: Real-time rendering is greatly accelerated by dedicated graphics hardware (GPUs) and by precomputing as much information as possible. Much of the lighting information in a game environment is precomputed and baked directly into the environment's texture files to increase rendering speed.
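The two ideas above can be sketched in a few lines of Python. `frame_budget_ms` shows how little time each frame gets (1000 / 60 ≈ 16.7 ms at 60 frames per second), and `bake_lightmap` precomputes simple diffuse lighting from one point light for a flat floor into a grid of texels, so the runtime step is only a table lookup. All names, the single point light and the inverse-square falloff model are illustrative assumptions, not how any specific engine bakes its lighting.

```python
import math

def frame_budget_ms(fps):
    """Time available to produce one frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps

def bake_lightmap(size, light_pos, intensity):
    """Offline step: precompute diffuse light for a floor patch (y = 0, x and z in [0, 1])."""
    lx, ly, lz = light_pos
    lightmap = []
    for row in range(size):
        z = (row + 0.5) / size  # center of the texel in world units
        texels = []
        for col in range(size):
            x = (col + 0.5) / size
            dx, dy, dz = lx - x, ly, lz - z
            dist = math.sqrt(dx * dx + dy * dy + dz * dz)
            cos_theta = dy / dist  # the floor normal is (0, 1, 0)
            # Lambert term with inverse-square falloff
            texels.append(max(cos_theta, 0.0) * intensity / (dist * dist))
        lightmap.append(texels)
    return lightmap

def shade_at_runtime(albedo, lightmap, row, col):
    """Runtime step: lighting is a cheap lookup instead of a calculation."""
    return albedo * lightmap[row][col]
```

The expensive loop runs once, ahead of time; during play only `shade_at_runtime` is needed, which fits easily into a 16.7 ms frame. The trade-off is that baked light cannot react to objects that move after baking.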

Offline rendering or pre-rendering: Offline rendering is used where speed is less of a concern; the calculations are usually performed on multi-core CPUs rather than on dedicated graphics hardware.

- Predictability: Offline rendering is used most often in animation and visual effects, where visual complexity and photorealism are held to a much higher standard. Because the content of every frame is known in advance, large studios have been known to spend up to 90 hours of render time on a single frame.

- Photorealism: Because offline rendering takes place within an open-ended time frame, it can produce more realistic images than real-time rendering. Characters, environments and their associated textures and lights are typically allowed higher polygon counts and texture files with a resolution of 4K (or higher).
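To put "up to 90 hours per frame" into perspective, here is a back-of-the-envelope calculation. It assumes 24 frames per second (the common film rate), that every frame takes the same time, and that a render farm renders each frame on a single machine; the function name and the farm size of 100 machines are hypothetical.

```python
import math

def render_farm_hours(seconds_of_film, hours_per_frame, machines, fps=24):
    """Rough cost of an offline render, assuming equal frame times and one frame per machine."""
    frames = seconds_of_film * fps
    machine_hours = frames * hours_per_frame
    # Machines work in parallel, but each frame is still rendered by a single machine
    wall_clock_hours = math.ceil(frames / machines) * hours_per_frame
    return frames, machine_hours, wall_clock_hours
```

Under these assumptions, ten seconds of film at 90 hours per frame costs 21,600 machine-hours, and even 100 machines need 270 hours of wall-clock time, because each machine still has to finish its share of frames one after another.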

There are three different rendering techniques.

In practice, three rendering techniques are commonly used; they are introduced below. Each has its own strengths and weaknesses, so each of the three is the right choice in certain situations.

Scanline rendering is a good choice when renders need to be produced as quickly as possible. Instead of rendering an image pixel by pixel, scanline renderers compute on a per-polygon basis. Scanline techniques combined with precomputed ("baked") lighting can reach 60 frames per second or more on a high-end graphics card.
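The core step can be sketched as filling one already-projected 2D polygon one image row (scanline) at a time: for each row, find where the polygon's edges cross it and fill the pixels between pairs of crossings. This is a minimal Python sketch under simplifying assumptions (pixels sampled at their centers, a single polygon, no depth test, texturing or lighting); the function name is hypothetical and a production renderer does considerably more.

```python
import math

def scanline_fill(polygon, width, height):
    """Return the set of (x, y) pixels whose centers lie inside a 2D polygon."""
    covered = set()
    n = len(polygon)
    for y in range(height):
        sample_y = y + 0.5  # sample each scanline through the pixel centers
        crossings = []
        for i in range(n):
            x0, y0 = polygon[i]
            x1, y1 = polygon[(i + 1) % n]
            # Half-open test: horizontal edges are skipped, shared vertices counted once
            if (y0 <= sample_y < y1) or (y1 <= sample_y < y0):
                t = (sample_y - y0) / (y1 - y0)
                crossings.append(x0 + t * (x1 - x0))
        crossings.sort()
        # Pixels between the 1st and 2nd crossing are inside, then the 3rd and 4th, ...
        for left, right in zip(crossings[0::2], crossings[1::2]):
            first = max(0, math.ceil(left - 0.5))
            last = min(width, math.ceil(right - 0.5))  # exclusive bound
            for x in range(first, last):
                covered.add((x, y))
    return covered
```

For example, `scanline_fill([(0, 0), (4, 0), (4, 4), (0, 4)], 8, 8)` covers the 16 pixels of a 4 × 4 square in the top-left corner of an 8 × 8 image.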

In ray tracing, one or more rays are traced for every pixel, from the camera to the nearest 3D object. The ray is then passed through a set number of so-called "bounces", which, depending on the materials in the 3D scene, can include reflection or refraction. The color of each pixel is computed algorithmically from how the ray interacts with the objects along its traced path. Ray tracing can produce more photorealism than scanline rendering, but it is far slower.
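A very small ray tracer makes the idea concrete. The Python sketch below shoots one ray per pixel from a camera at the origin, finds the nearest sphere it hits, applies a simple diffuse (Lambert) term and, for reflective surfaces, follows the mirrored ray for up to `max_bounces` bounces. The scene, the grayscale colors and all function names are hypothetical; refraction, shadows and anti-aliasing are deliberately left out.

```python
import math

def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

# Hypothetical scene: (center, radius, gray value, reflectivity)
SPHERES = [
    ((0.0, 0.0, -3.0), 1.0, 0.9, 0.3),
    ((0.0, -101.0, -3.0), 100.0, 0.6, 0.0),  # huge sphere used as a floor
]
LIGHT_DIR = normalize((1.0, 1.0, 1.0))  # direction toward a distant light
BACKGROUND = 0.0

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small epsilon avoids re-hitting the surface the ray starts on
            return t
    return None

def trace(origin, direction, bounces):
    nearest = None
    for sphere in SPHERES:
        t = hit_sphere(origin, direction, sphere[0], sphere[1])
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, sphere)
    if nearest is None:
        return BACKGROUND
    t, (center, _radius, gray, reflectivity) = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    local = gray * max(dot(normal, LIGHT_DIR), 0.0)  # Lambert diffuse term
    if bounces == 0 or reflectivity == 0.0:
        return local
    # Mirror the ray about the surface normal and follow it for one more bounce
    reflected = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
    return (1.0 - reflectivity) * local + reflectivity * trace(point, reflected, bounces - 1)

def render(width, height, max_bounces=2):
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Camera at the origin, looking down -z through an image plane at z = -1
            x = 2.0 * (i + 0.5) / width - 1.0
            y = 1.0 - 2.0 * (j + 0.5) / height
            row.append(trace((0.0, 0.0, 0.0), normalize((x, y, -1.0)), max_bounces))
        image.append(row)
    return image
```

Setting `max_bounces=0` turns reflections off entirely. Each extra bounce can add another full intersection test against the scene for every pixel, which illustrates why ray tracing takes so much longer than scanline rendering.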

Unlike ray tracing, radiosity is computed independently of the camera and works per surface rather than per pixel. Its primary purpose is to simulate surface color more accurately by taking indirect illumination (bounced diffuse light) into account. Radiosity is typically characterized by soft, graduated shadows and color bleeding, where light from colored objects "bleeds" onto nearby surfaces.
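In the classic radiosity formulation, each surface patch i has a radiosity B_i equal to its own emission E_i plus the fraction ρ_i (its reflectance) of the light arriving from all other patches, weighted by form factors F_ij: B_i = E_i + ρ_i Σ_j F_ij B_j. The Python sketch below solves this system by repeated substitution (Jacobi iteration) for three patches and three color channels. The form factors and colors are made-up numbers; a real renderer derives the form factors from the scene geometry, which is why the result does not depend on the camera.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=100):
    """Jacobi iteration of B_i = E_i + rho_i * sum_j F_ij * B_j, per color channel."""
    n = len(emission)
    channels = len(emission[0])
    radiosity = [list(e) for e in emission]  # start from emitted light only
    for _ in range(iterations):
        # The new list is built entirely from the previous iteration's values
        radiosity = [
            [
                emission[i][c] + reflectance[i][c]
                * sum(form_factors[i][j] * radiosity[j][c] for j in range(n))
                for c in range(channels)
            ]
            for i in range(n)
        ]
    return radiosity

# Hypothetical patches: 0 = light, 1 = red wall, 2 = white floor (RGB values)
EMISSION = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
REFLECTANCE = [(0.0, 0.0, 0.0), (0.8, 0.1, 0.1), (0.7, 0.7, 0.7)]
FORM_FACTORS = [
    [0.0, 0.2, 0.4],
    [0.2, 0.0, 0.3],
    [0.3, 0.3, 0.0],
]
```

Running `solve_radiosity(EMISSION, REFLECTANCE, FORM_FACTORS)` leaves the white floor with slightly more red than green or blue, because part of the light it receives has bounced off the red wall: that is the color bleeding described above.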

In practice, radiosity and ray tracing are often combined, which makes it possible to produce impressive, photorealistic renders.

The most widely used rendering engines.

Although rendering relies on highly sophisticated calculations, today's software exposes easy-to-understand parameters, so users do not have to deal with the underlying mathematics to achieve photorealistic results. Every 3D software package includes a render engine, and most of them come with material and lighting packages that make an impressive degree of photorealism achievable.

Mental Ray (Autodesk Maya) is very versatile, relatively fast and arguably the best renderer for character images that require subsurface scattering. Mental Ray uses a combination of ray tracing and "global illumination" (radiosity).

V-Ray, on the other hand, is typically used together with 3ds Max. This combination is unrivaled for architectural visualization and environment rendering. V-Ray's main advantages over the alternatives are its lighting tools and its extensive material library for arch-viz.

This was only a brief overview of the basics of rendering. It is a technical topic, but it becomes very interesting once you take a closer look at some of the common techniques.

Thanks for reading.

2 comments

Good article. Thanks

All great, but like many others I take issue with the definition of "scanline rendering". Historically, "scanline rendering" is something different from "rasterizing". Scanline rendering works one image row at a time and looks for intersections between that row and the already transformed polygons. Well-known scanline renderers included the 3D Studio (MAX) renderer and Caligari's trueSpace renderer. Rasterizing, on the other hand, works on a per-polygon basis. The best-known rasterizing renderer is, for example, the renderer built into Lightwave up to version 9. Each of these technologies has (had) its own advantages and disadvantages.