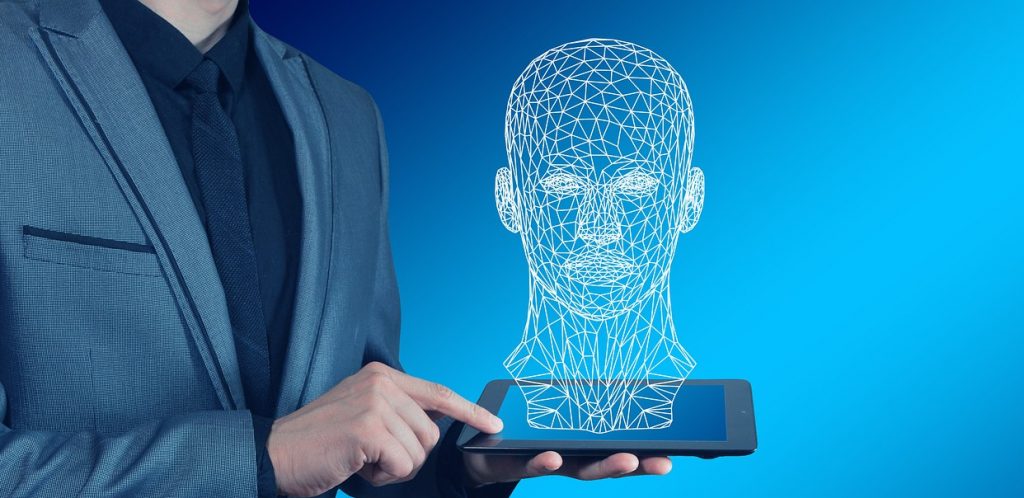

How 3D faces can be generated automatically for video games.

A research team from a Chinese game company has developed a system that can automatically extract faces from photos and use the image data to generate in-game character models. We have summarized the results of the paper entitled “Face-to-Parameter Translation for Game-Character Auto-Creation” below.

More and more game developers are using AI to automate time-consuming tasks. For example, developers use AI algorithms to animate the movements of characters and objects. Another current application of AI in game development is building more powerful character customization tools.

Character customization is a popular feature of role-playing games that lets players customize their avatars in a variety of ways. Many players choose to make their avatars look like themselves, which becomes increasingly achievable as character customization systems grow more sophisticated. However, as these character creation tools become more complex, they also become much harder to use. Creating a character that looks like yourself can take hours of adjusting sliders and changing cryptic parameters. The researchers set out to solve this problem by developing a system that analyzes a photo of the player and creates a model of the player’s face.

The automatic character creation tool consists of two parts: an imitation learning system and a parameter translation system. The parameter translation system extracts features from the input image and produces face parameters for the imitation model. The imitation model then uses these parameters to iteratively generate and refine the rendered face.

The imitation learning system has an architecture that simulates the way the game engine renders character models in a consistent style. The imitation model is designed to capture how a face is perceived, taking into account complex features such as beards, lipstick, eyebrows and hairstyle. The face parameters are updated by gradient descent, based on a comparison with the input. At each step, the difference between the input features and the generated model is measured, and the parameters are adjusted until the in-game model matches the input features.
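The parameter search described above can be illustrated with a toy sketch. It assumes, purely for illustration, that the trained imitator is a fixed linear map from face parameters to facial features (the real imitator is a neural network), that each face parameter is a normalized slider in [0, 1], and that the feature difference is a squared error. The names `imitator`, `fit_face_parameters`, the starting point and the learning rate are all hypothetical.

```python
def imitator(params, weights):
    """Hypothetical stand-in for the trained imitator: a fixed linear map
    from face parameters to a feature vector."""
    return [sum(w * p for w, p in zip(row, params)) for row in weights]


def loss(params, weights, target):
    """Squared difference between generated features and the photo's features."""
    feats = imitator(params, weights)
    return sum((f - t) ** 2 for f, t in zip(feats, target))


def gradient(params, weights, target):
    # dL/dp_j = sum_i 2 * (f_i - t_i) * W_ij for the linear imitator above
    feats = imitator(params, weights)
    residual = [f - t for f, t in zip(feats, target)]
    return [2 * sum(r * row[j] for r, row in zip(residual, weights))
            for j in range(len(params))]


def fit_face_parameters(weights, target, steps=500, lr=0.1):
    """Gradient descent on the face parameters, clamped to the slider range."""
    params = [0.5] * len(weights[0])  # start every slider at its midpoint
    for _ in range(steps):
        grad = gradient(params, weights, target)
        # descent step, then clamp each parameter back into [0, 1]
        params = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(params, grad)]
    return params


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]
    target = imitator([0.2, 0.7], w)  # features of a "photo" made with known sliders
    print(fit_face_parameters(w, target))  # values close to [0.2, 0.7]
```

Because the imitator is differentiable, the optimizer can follow the gradient of the feature difference back to the sliders instead of trying parameter combinations blindly; with a real neural imitator, an autodiff framework would compute the gradient.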

After the imitator has been trained, the parameter translation system compares the outputs of the imitation network with the features of the input image and selects a feature space in which the optimal face parameters can be calculated.

The biggest challenge was to ensure that the 3D character models preserve the details and appearance of the people in the photographs. This is a cross-domain problem: rendered images of 3D characters are compared with 2D photos of real people, and the core facial features of both must match.

The researchers addressed this problem with two techniques. The first was to split model training into two learning tasks: a face-content task and a discriminative task. The general shape and structure of a person’s face is captured by minimizing the loss between global appearance features of the two images, while fine, discriminative details are filled in by minimizing the loss between local features, such as shadows within small regions. The two tasks are then merged into a complete representation.
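A minimal sketch of how a global term and a local term can be merged into one objective follows. It assumes, for illustration only, that the global appearance of each image is summarized by an embedding vector compared with cosine distance, and that local detail is compared as mean absolute pixel difference over small image regions. The function names and the weighting factor `alpha` are hypothetical, not taken from the paper.

```python
import math


def cosine_distance(a, b):
    """Global term: 1 - cosine similarity between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def local_detail_loss(regions_a, regions_b):
    """Local term: mean absolute difference inside each small region,
    averaged over all regions. Each region is a flat list of pixel intensities."""
    per_region = [
        sum(abs(x - y) for x, y in zip(ra, rb)) / len(ra)
        for ra, rb in zip(regions_a, regions_b)
    ]
    return sum(per_region) / len(per_region)


def combined_loss(embed_photo, embed_render, regions_photo, regions_render, alpha=1.0):
    """Merge both tasks into a single objective; alpha balances fine detail
    against overall face shape."""
    return (cosine_distance(embed_photo, embed_render)
            + alpha * local_detail_loss(regions_photo, regions_render))


if __name__ == "__main__":
    # orthogonal embeddings (global distance 1.0) and one mismatched region (0.5)
    print(combined_loss([1.0, 0.0], [0.0, 1.0],
                        [[0.0, 0.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]],
                        alpha=2.0))  # prints 2.0
```

Keeping the two terms separate makes it easy to see which part of the mismatch dominates; raising `alpha` pushes the search toward matching small-scale details at the expense of overall shape.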

The second technique was a 3D surface construction system based on a simulated skeletal structure that takes bone shape into account. This enabled the researchers to create more sophisticated and accurate 3D faces than other 3D modeling systems based on grid or face meshes.
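To make the idea of a skeleton-driven face more concrete, here is a minimal sketch of linear blend skinning, a standard technique in which each mesh vertex follows a weighted mix of bone transforms. It is not taken from the paper: the `Bone` class, its slider-style `offset` and `scale` fields, and the vertex weights are hypothetical, and bone rotation is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Bone:
    """Hypothetical face bone: a pivot point plus slider-driven offset and scale."""
    pivot: Vec3
    offset: Vec3 = (0.0, 0.0, 0.0)
    scale: float = 1.0

    def transform(self, v: Vec3) -> Vec3:
        # scale the point about the bone's pivot, then translate by the offset
        return tuple(p + self.scale * (x - p) + o
                     for x, p, o in zip(v, self.pivot, self.offset))


def skin_vertex(rest: Vec3, bones: List[Bone], weights: List[float]) -> Vec3:
    """Linear blend skinning: the deformed vertex is the weighted sum of the
    positions each influencing bone would move it to (weights should sum to 1)."""
    moved = [bone.transform(rest) for bone in bones]
    return tuple(sum(w * m[i] for w, m in zip(weights, moved)) for i in range(3))


if __name__ == "__main__":
    jaw = Bone(pivot=(0.0, 0.0, 0.0), offset=(1.0, 0.0, 0.0))  # shifted sideways
    cheek = Bone(pivot=(0.0, 0.0, 0.0), scale=2.0)              # enlarged
    print(skin_vertex((1.0, 1.0, 0.0), [jaw, cheek], [0.5, 0.5]))  # (2.0, 1.5, 0.0)
```

In a bone-driven model like this, a small set of bone parameters moves many vertices smoothly at once, which is why such a face stays plausible as the parameters change, whereas editing individual mesh vertices directly offers no such guarantee.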

Creating realistic 3D models from 2D images is impressive in itself, but the automatic generation system is not limited to photos. It can also take sketches and caricatures of faces and render them as 3D models with impressive accuracy. The research team believes the system handles such varied 2D inputs because it analyzes facial semantics rather than interpreting raw pixel values.

Although the automatic character generator can create characters from photographs, the researchers note that users can also treat it as a complementary tool and further edit the generated character to their liking.
